HONG KONG, June 27, 2023 /PRNewswire/ -- The tiny visual systems of flying insects have inspired researchers at the Hong Kong Polytechnic University (PolyU) to develop Optoelectronic Graded Neurons that perceive dynamic motion, expanding the functions of vision sensors for agile response.

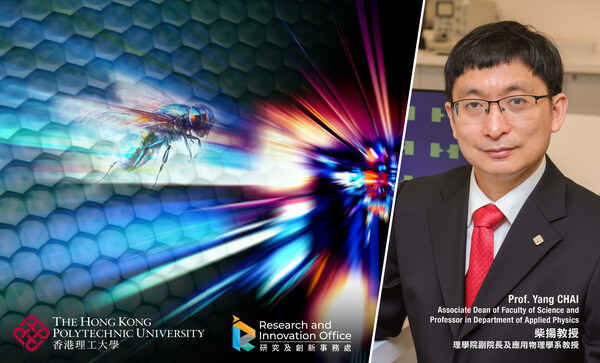

Led by Prof. Yang CHAI, Associate Dean of the Faculty of Science and Professor in the Department of Applied Physics at the Hong Kong Polytechnic University (PolyU), the research team drew on the tiny visual systems of flying insects to develop Optoelectronic Graded Neurons that perceive dynamic motion, expanding the functions of vision sensors for agile response.

Biological visual systems can effectively perceive motion in complicated environments with high energy efficiency. In particular, flying insects have a high flicker fusion frequency (FFF) and can perceive objects moving at high speeds. Drawing on this example from nature could help advance machine vision systems that use very economical hardware resources. By contrast, conventional machine vision systems for action recognition typically involve complex artificial neural networks, such as architectures that compute separate "spatial" and "temporal" streams.

The research team, led by Prof. CHAI, showed that Optoelectronic Graded Neurons can achieve a high information transmission rate (>1,000 bit/s) and fuse spatial and temporal information at sensory terminals. Significantly, the finding enables functions that are unavailable in conventional image sensors.

Prof. CHAI said, "This research fundamentally deepens our understanding of bioinspired computing. The findings could contribute to applications in autonomous vehicles, which need to recognize high-speed motion in road traffic. The technology may also be used in surveillance systems."

Bioinspired in-sensor computing

Machine vision systems usually consist of hardware with physically separated image sensors and processing units. However, most sensors can only output "spatial" frames without fusing "temporal" information. Accurate motion recognition therefore requires both "spatial" and "temporal" stream information to be transferred to, and fused in, the processing units. As a result, motion processing has been a computational challenge with considerable demands on computational resources, and bioinspired in-sensor motion perception offers a way to make progress on it.

The PolyU research, "Optoelectronic graded neurons for bioinspired in-sensor motion perception", was published in Nature Nanotechnology on 20 April 2023. The team's work focuses on in-sensor computing, which processes visual information at sensory terminals. In previous studies, the team demonstrated contrast enhancement of static images and visual adaptation to different light intensities.

Prof. CHAI noted, "We have been working on artificial vision for years. Previously, we only used sensor arrays to perceive static images in different environments and enhance their features. We then asked whether we could use a sensor array to perceive dynamic motion. However, sensory terminals cannot afford complicated hardware. Therefore, we chose to investigate tiny visual systems, such as those of flying insects, which can agilely perceive dynamic motion."

Flying insects such as Drosophila, despite their tiny visual systems, can recognize a moving object much faster than humans. Specifically, their visual system consists of non-spiking graded neurons (retina-lamina) that have a much higher information transmission rate (R) than the spiking neurons in the human visual system. The small size of the insect visual system also greatly shortens the signal transmission distance between the retina (sensor) and the brain (computation unit).

Essentially, the graded neurons enable efficient encoding of temporal information at sensory terminals, which reduces the amount of visual data that must be transferred to a computation unit to fuse spatiotemporal (spatial and temporal) information. This agile motion perception inspired the research team to develop artificial Optoelectronic Graded Neurons for in-sensor motion perception.

Highly accurate motion recognition

Highly accurate motion recognition is essential for machine applications such as automated vehicles and surveillance systems. The research found that the charge dynamics of shallow trapping centres in MoS2 phototransistors emulate the characteristics of graded neurons, showing an information transmission rate of 1,200 bit/s and effectively encoding temporal light information.

By encoding the spatiotemporal information and feeding the resulting compressed images into an artificial neural network, the team reached an action recognition accuracy of 99.2%, much higher than the accuracy achieved with conventional image sensors (~50%).

The research addresses a long-standing challenge in motion processing, which demands considerable computational resources. The artificial graded neurons enable direct sensing and encoding of temporal information. The bioinspired vision sensor array can encode spatiotemporal visual information and display the contour of a motion trajectory, enabling motion perception with limited hardware resources.
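The release does not spell out the device physics, but the general idea of folding a motion sequence into one frame can be illustrated with a toy model. The following is a minimal sketch that assumes each pixel behaves as a simple leaky integrator: its stored response decays exponentially between frames and increases when the pixel is lit. This is an illustrative stand-in, not the authors' MoS2 charge-trapping model; the function names `encode_trajectory` and `moving_dot`, the time constant `tau`, and the 5-by-5 test scene are all hypothetical.

```python
import math

def encode_trajectory(frames, tau=2.0, gain=1.0):
    """Fuse a sequence of binary frames into one encoded frame.

    Hypothetical toy model: each pixel is a leaky integrator whose state
    decays by exp(-1/tau) per frame and rises by `gain` when the pixel is lit.
    """
    rows, cols = len(frames[0]), len(frames[0][0])
    decay = math.exp(-1.0 / tau)  # per-frame decay factor (time step = 1 frame)
    state = [[0.0] * cols for _ in range(rows)]
    for frame in frames:
        for r in range(rows):
            for c in range(cols):
                # Older light fades; new light adds to the pixel's response.
                state[r][c] = state[r][c] * decay + gain * frame[r][c]
    return state

def moving_dot(n_frames, size):
    """Hypothetical input: one bright pixel moving left to right along the middle row."""
    mid = size // 2
    frames = []
    for t in range(n_frames):
        frame = [[0] * size for _ in range(size)]
        frame[mid][t % size] = 1
        frames.append(frame)
    return frames

encoded = encode_trajectory(moving_dot(5, 5))
print([round(v, 3) for v in encoded[2]])  # prints [0.135, 0.223, 0.368, 0.607, 1.0]
```

In this toy model the most recent position is brightest and earlier positions fade, so a single frame carries both the contour of the trajectory and the direction of motion; `tau` sets how much history each frame retains.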

Inspired by the agile motion perception of insect visual systems, the research brings significant progress in transmission speed and in the intelligent processing of combined static and dynamic motion information for machine vision systems.

About the research team

The research was a collaboration among scientists from The Hong Kong Polytechnic University, Peking University in China, and Yonsei University in South Korea, and has been published in the international journal "Nature Nanotechnology."